By: Lynn Goldstein and Barb Lawler

This month the IAF held a multistakeholder session featuring our research on Demonstrable Assessments for U.S. State Privacy Laws, attended by regulators, academics, and businesses. The objective of the session was to collectively advance the content and process expectations for organizations submitting a Risk or Data Protection Assessment (RDPA) to a U.S. state regulator.

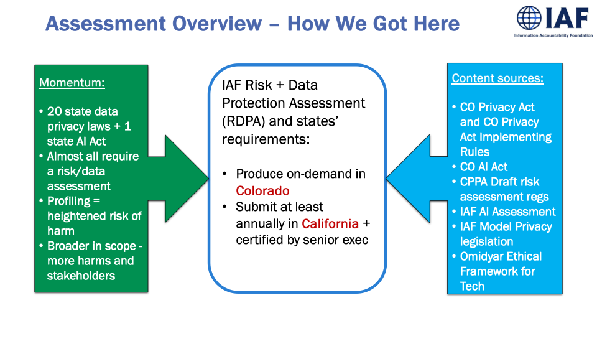

With 20 (and counting) U.S. state privacy laws and one state AI Act now passed, almost all of these states require an RDPA where there is a heightened risk of harm to more than just the individual. These assessments are broader in scope than other impact assessments, such as those expected by the GDPR. Businesses are required to assess more stakeholders, more interests, more benefits, and more risks. In addition, the RDPA must be produced on demand in Colorado and provided at least annually in California, and it must be signed by senior executives.

The RDPA is based upon the U.S. state privacy laws, the rules promulgated under them, and the draft regulations proposed under them. It also includes information from three IAF sources: the IAF’s AI Assessment, which was developed in consultation with AI experts and practitioners (Responsible AI) and privacy and data protection professionals, and which is based on the IAF’s 10+ years of experience in developing big data and complex analytics assessments such as those to be used with AI; the IAF’s model legislation, the FAIR and OPEN USE ACT, which is based upon input from IAF strategists and membership; and the IAF’s research paper on multidimensional proportionality, A Principled Approach to Rights and Interests Balancing. Elements from the Omidyar Ethical Framework for Technology and Business Data Ethics were also used.

Participants commented on the importance of reviewing and discussing this project in a multistakeholder environment. We were encouraged to consider how non-privacy teams, such as AI, security, product readiness/IT, and business operations, think about assessments.

The IAF’s multidimensional weighing is unique. It factors in as many stakeholders, benefits, and risks as are relevant to the processing being assessed, and it can weigh each stakeholder vis-à-vis each of the other factors. It can demonstrate the results mathematically, pictorially, or both, and it can be used to supplement the required narrative response.

The RDPA required by the Regulations is used only when High-Risk Processing is conducted. Because of the IAF’s additions, the IAF version of the RDPA can also assess Artificial Intelligence (AI) where AI goes beyond automated decisionmaking technology (ADMT). It seems increasingly likely that these High-Risk Processing scenarios will also involve AI, which supports including AI in an RDPA. The AI or algorithmic aspects of these laws make the resulting assessment and the assessment process more complex, requiring broader cross-organization collaboration and alignment.

The weighing of the risks and benefits to the numerous stakeholders will be worthwhile only if it is done competently and with integrity. The IAF version of the RDPA enables businesses to weigh the risks and benefits of AI in that way. Organizations may find it challenging to identify, describe, and assess impacts to all the relevant stakeholders, but that effort is no longer optional. Notably, the Demonstrable Assessments for U.S. State Privacy Laws Project dovetails with the IAF’s Project on Legitimate Interest for an AI World.

Convening multistakeholder sessions like this will be crucial for businesses and regulators as the shift from Accountability to Demonstrable Accountability goes global.
