The vast amount of data made possible and accessible through today’s information technologies, and the ever-increasing capability to analyze that data, are unlocking tremendous insights that enable new solutions to health challenges, new business models, personalization, and benefits to individuals and society. At the same time, new risks to individuals can be created. Against this backdrop, policy makers and regulators are wrestling with how to apply current policy models to achieve the dual objectives of data protection and beneficial uses of data.

One of the emerging market pressures is a call to have data processed not only in a legal manner but also in a fair and just manner. The word “ethics” and the phrase “ethical data processing” are in vogue. Yet today we lack a common framework to decide both what might be considered ethical and, just as important, how an ethical approach would be implemented. In their article on building trust, Jack Balkin and Jonathan Zittrain posit: “To protect individual privacy rights, we’ve developed the idea of ‘information fiduciaries.’ In the law, a fiduciary is a person or business with an obligation to act in a trustworthy manner in the interest of another.” However, what would acting in a trustworthy fashion look like? It is an interesting approach that also illustrates the translation over time of ethical models into not just law but also commonly accepted practice (e.g., doctors and the Hippocratic oath).

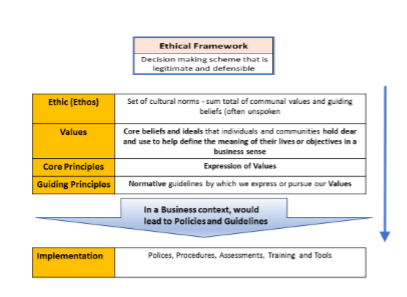

So, while we do not have a lingua franca, privacy and data protection enforcement agencies are increasingly asking companies to understand the ethical issues raised for individuals, groups of individuals, or the broader society when complex data processing takes place. These issues go beyond explicit legal and contractual requirements to the question of whether processing is fair and just, not just legal. Translating ethical norms and values-based uses of data into internal policies, mechanisms, and obligations that can be codified into operational guidance, processes, and people-centered thinking is a challenge for many organizations. Even before this translation stage, it is key to recognize that the word “ethics” and the phrase “ethical approach” do not appear in many privacy or data protection laws. However, synonyms like “fairness” exist today, will be stronger under the EU General Data Protection Regulation, and are increasingly being looked at by global regulators.

An ethical framework is a careful articulation of an “ethos,” or set of norms and guiding beliefs, that is expressed in terms of explicitly stated values (or core principles) and is made actionable in guiding principles.

Today, organizations with mature privacy programs have internal policies that cover their legal requirements as well as requirements that go beyond the law. Examples include industry standards or positions that an organization has chosen to take for competitive reasons. The policies are usually the basis for operational guidance and mechanisms to put such guidance into place.

While privacy law may be clear, newer requirements, such as assessment processes that address fair and just processing and the impact on individuals, are less so. Organizations are challenged to translate ethical norms for data use into values and principles that become policy and, ultimately, operational guidance, which includes data processing assessments. Such guidance can serve as guiderails for business units that need to meet ethical standards for data use that go beyond privacy.

While the IAF has defined a broad ethical framework as part of the Big Data Ethics Initiative, there is currently a gap in the guidance: the translation of this ethical framework into values and action principles that an organization can express as internal Policy. This is a key connection point on the path to operational guidance, such as a Comprehensive Data Impact Assessment (which can include privacy impact assessments and data protection impact assessments), developed as part of the IAF’s Effective Data Protection Governance Project, that incorporates ethical data use objectives. This translation step also helps organizations establish the ultimate guiderails they want to use.

As a parallel example, many organizations have standards of (business) conduct. These often start from a describable set of Values that are then codified into a set of Principles and ultimately into Policy, which serves as the means to communicate expected behavior to employees. In short, the “Principle” often serves as a key bridge between Values and Policy, thereby creating a meaningful framework that can then be operationalized in the organization.

What is needed to advance this dialogue is a starting point for what an “ethical framework” might look like and how the various layers or levels might be described. In a pictorial model, such a framework could look like this:

Key to the ethical framework is a starting point for what the Principle (Core and Guiding) layer could look like. Below is an example of what this layer might consist of. It was developed using a combination of the IAF’s Big Data and Ethical Values, the AI principles/values, “How to Hold Algorithms Accountable” from MIT Technology Review, and “Principles for Algorithmic Transparency and Accountability” from the ACM. The principles are written in “neutral” language, as it is envisioned that organizations would adapt them to fit their own environments and potentially translate them for external communication as they see fit. They go beyond legal requirements.

Ethical Data Use Core and Guiding Principles

- Beneficial

    - Uses of data should be proportional in providing benefits and value to individual users of the product or service. While the focus should be on the individual, benefits may also accrue at a higher level, such as to groups of individuals and even society.

    - Where a data use has a potential impact on individual(s), the benefit should be defined and assessed against the potential risks this use might create.

    - Where a data use does not impact an individual, risks, such as those tied to adequately protecting the data and reducing the identifiability of an individual, should be identified.

    - Once all risks are identified, appropriate ways to mitigate these risks should be implemented.

- Fair, Respectful, and Just

    - The use of data should be viewed by the reasonable individual as consistent, fair, and respectful.

    - Data use should support the value of human dignity: that individuals have an innate right to be valued and respected and to receive ethical treatment. Human dignity goes beyond individual autonomy to interests such as better health and education.

    - Entities should assess data and data use for inadvertent or inappropriate bias or labeling that may have an impact on reputation or may be viewed as discriminatory by individual(s).

    - The accuracy and relevancy of data and algorithms used in decision making should be regularly reviewed to reduce errors and uncertainty.

    - Algorithms should be auditable and should be monitored and evaluated for discriminatory impacts.

    - Data should be used consistently with the ethical values of the entity.

    - The least data-intensive processing that effectively meets the data processing objectives should be used.

- Transparent and Autonomous Protection (engagement and participation)

    - As part of the dignity value, entities should always take steps to be transparent about their use of data. Proprietary processes may be protected, but not at the expense of transparency about substantive uses.

    - Decisions made and used about an individual should be explainable.

    - Dignity also means providing individuals and users with appropriate and meaningful engagement in, and control over, uses of data that impact them.

- Accountability and Redress Provision

    - Entities are accountable for their use of data to meet legal requirements and should be accountable for using data consistently with the principles of Beneficial; Fair, Respectful, and Just; and Transparent and Autonomous Protection. They should stand ready to demonstrate the soundness of their accountability processes to those entities that oversee them.

    - Entities should make accessible redress systems available.

    - Individuals and users should always have the ability to question the use of data that impacts them and to challenge situations where use is not consistent with the core principles of the entity.
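To make the guiding principle that algorithms should be monitored and evaluated for discriminatory impacts a little more concrete, here is a minimal Python sketch of one possible check. It is an illustration only, not part of the IAF framework: the function names, the sample data, and the 0.8 review threshold are all assumptions, with the threshold loosely modeled on the "four-fifths" rule of thumb used in US employment selection guidance. An organization would choose its own measures and thresholds as part of its assessment process.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable outcomes for each group.

    `decisions` is an iterable of (group, favorable) pairs, where
    `favorable` is True when the decision benefited the individual.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, is_favorable in decisions:
        totals[group] += 1
        if is_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def impact_ratio(decisions):
    """Return the lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions)
    if not rates:
        return 1.0  # nothing to compare
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, favorable decision?)
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

REVIEW_THRESHOLD = 0.8  # assumed value, not taken from the principles above

ratio = impact_ratio(sample)
needs_review = ratio < REVIEW_THRESHOLD
print(f"impact ratio: {ratio:.3f}, needs review: {needs_review}")
```

A low ratio does not by itself show that a data use is unfair. In this sketch it only flags the decision process for the kind of human review and benefit-versus-risk assessment the principles describe.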

The IAF believes it is important to have a lingua franca that enables a broad dialogue around not just how fair data processing is considered but also how an ethical framework helps implement the resulting values and principles.

Let us know what you think.
